Types of Prompt Engineering: From Zero-Shot to Negative Prompting

Want to master AI interactions? It all starts with how you frame your questions. Prompt engineering is the practice of crafting precise instructions to get optimal AI responses. It blends linguistic understanding and strategic thinking, and it’s key to getting tailored, high-quality answers.

In this article, we explore various types of prompt engineering techniques, including zero-shot, one-shot, few-shot, and more, to help you understand their applications and benefits.

Here’s everything you need to know to make your AI prompts spot on. 👇

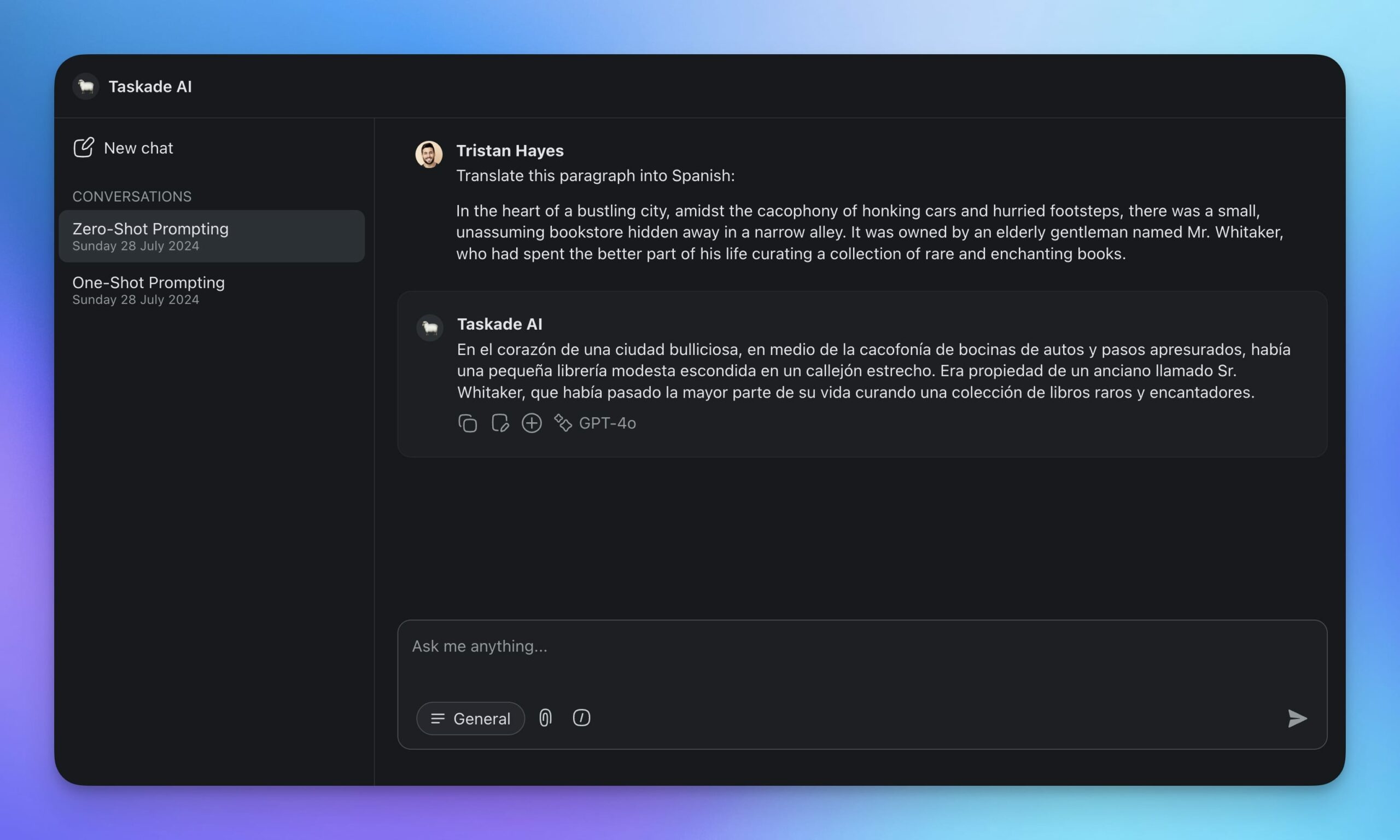

👌 Zero-Shot Prompting

Zero-shot prompting is the most basic prompting technique. It involves asking the AI to perform a task or answer a question without providing any specific examples or additional context.

This approach is perfect for simple exchanges where no additional knowledge beyond the LLM’s training data is needed. This can include ad-hoc tasks like translating text or answering questions.

For example, you may ask the LLM, "What is the capital of Japan?" Or you might instruct the AI tool to "translate the following paragraph into Spanish." Plain and simple.
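To make this concrete, here is a minimal Python sketch of a zero-shot prompt. It assumes the chat-style format many LLM APIs use, where a prompt is a list of `{"role": ..., "content": ...}` messages. The `build_zero_shot_prompt` helper is hypothetical and calls no model. It only shows that a zero-shot prompt is the instruction alone, with no examples attached.

```python
def build_zero_shot_prompt(instruction: str) -> list[dict[str, str]]:
    """Return a chat-style message list containing only the task instruction.

    Hypothetical helper: the {"role", "content"} shape mirrors common chat
    APIs, but nothing is sent to a model here.
    """
    # Zero-shot: no examples, no extra context, just the request itself.
    return [{"role": "user", "content": instruction}]


messages = build_zero_shot_prompt("What is the capital of Japan?")
print(messages)
# [{'role': 'user', 'content': 'What is the capital of Japan?'}]
```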

The downsides? Don’t expect deep dives or detailed analysis.

Think of it as asking someone who has read a lot of books to answer a question on a topic they've never studied in depth. While the response may be correct in a general sense, it may lack the depth, accuracy, and context needed for a thorough explanation.

This is where one-shot prompting comes into play.

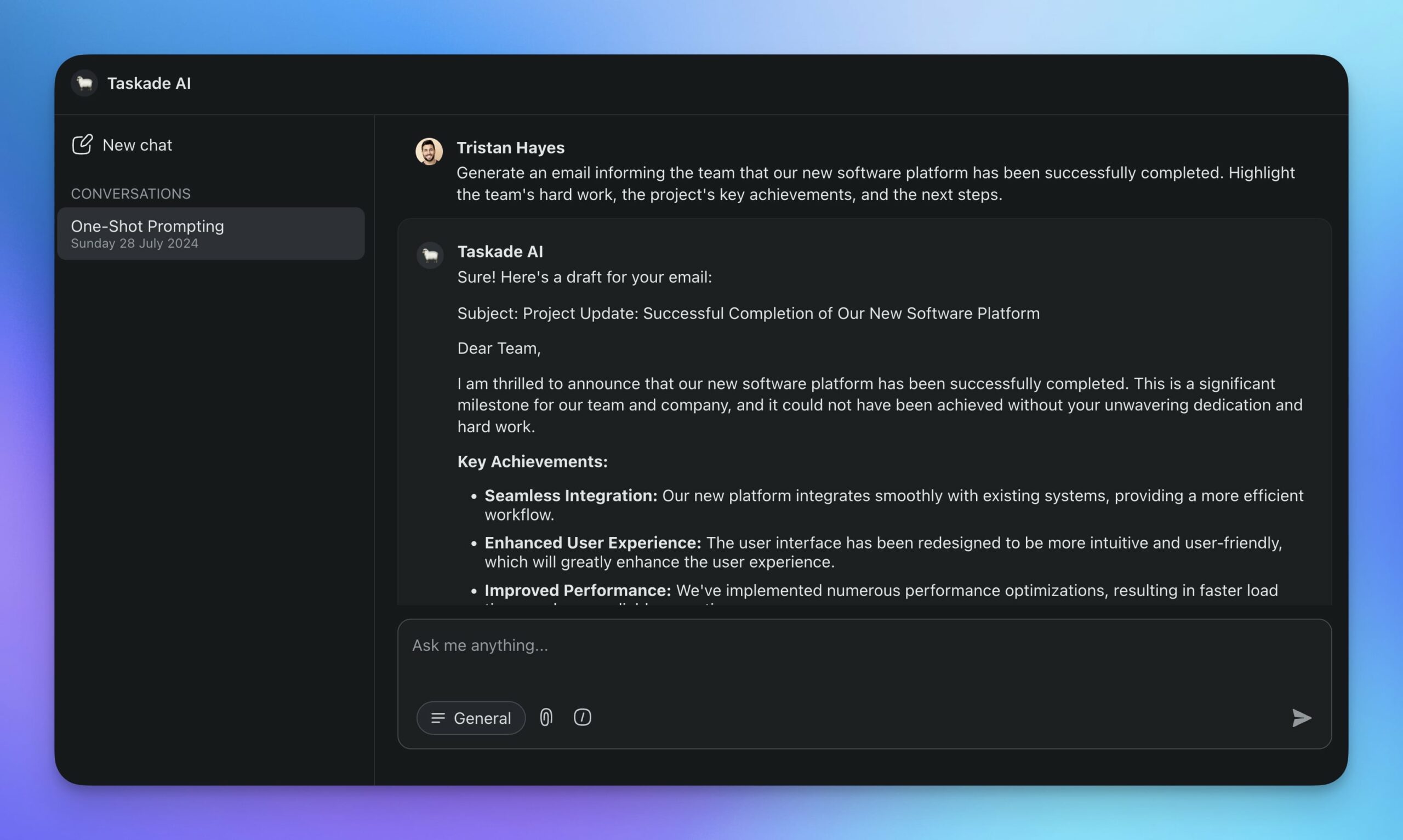

☝️ One-Shot Prompting

One-shot prompting takes things up a notch. It involves providing the AI with a single example to illustrate the task. The example helps the model to understand the requirements better.

So, how does it work?

Imagine you need the model to generate text in a particular style or format. You provide a specific example of the output you want, such as a passage written in a formal tone or a short story. For instance, if you want a formal response, you might say, "Here's an example: 'Dear Mr. Smith, I am writing to inform you...'"
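The formal-letter example can be packaged as a reusable one-shot prompt. This is a minimal sketch with a hypothetical `build_one_shot_prompt` helper; the section labels ("Here's an example:", "Now write a response for:") are an assumed convention, not something any particular model requires.

```python
def build_one_shot_prompt(instruction: str, example: str, task_input: str) -> str:
    """Combine an instruction, exactly one example, and the new input.

    Hypothetical helper for illustration; no model is called.
    """
    return (
        f"{instruction}\n\n"
        # One-shot: a single example sets the benchmark for style and format.
        f"Here's an example:\n{example}\n\n"
        f"Now write a response for:\n{task_input}"
    )


prompt = build_one_shot_prompt(
    instruction="Reply to customer emails in a formal tone.",
    example="Dear Mr. Smith, I am writing to inform you...",
    task_input="A customer asks when the store reopens after the holidays.",
)
print(prompt)
```

Because there is only one example, whatever quirks it has (length, greeting, sign-off) are likely to be copied, which is the generalization risk described below.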

By showing one example, you set a clear benchmark. This makes one-shot prompting ideal for tasks that need a bit more guidance, like formatting, style adjustments, or generating context-specific responses.

The advantages? Examples clarify the task for the model, so you get more accurate and relevant responses. Well, at least that's how it works most of the time.

For complex tasks that require intricate reasoning, one-shot prompting may not be enough; one example is unlikely to capture all the nuances needed for a comprehensive response. Plus, if the example is ambiguous or not representative, the model might fail to generalize well to variations of the task.

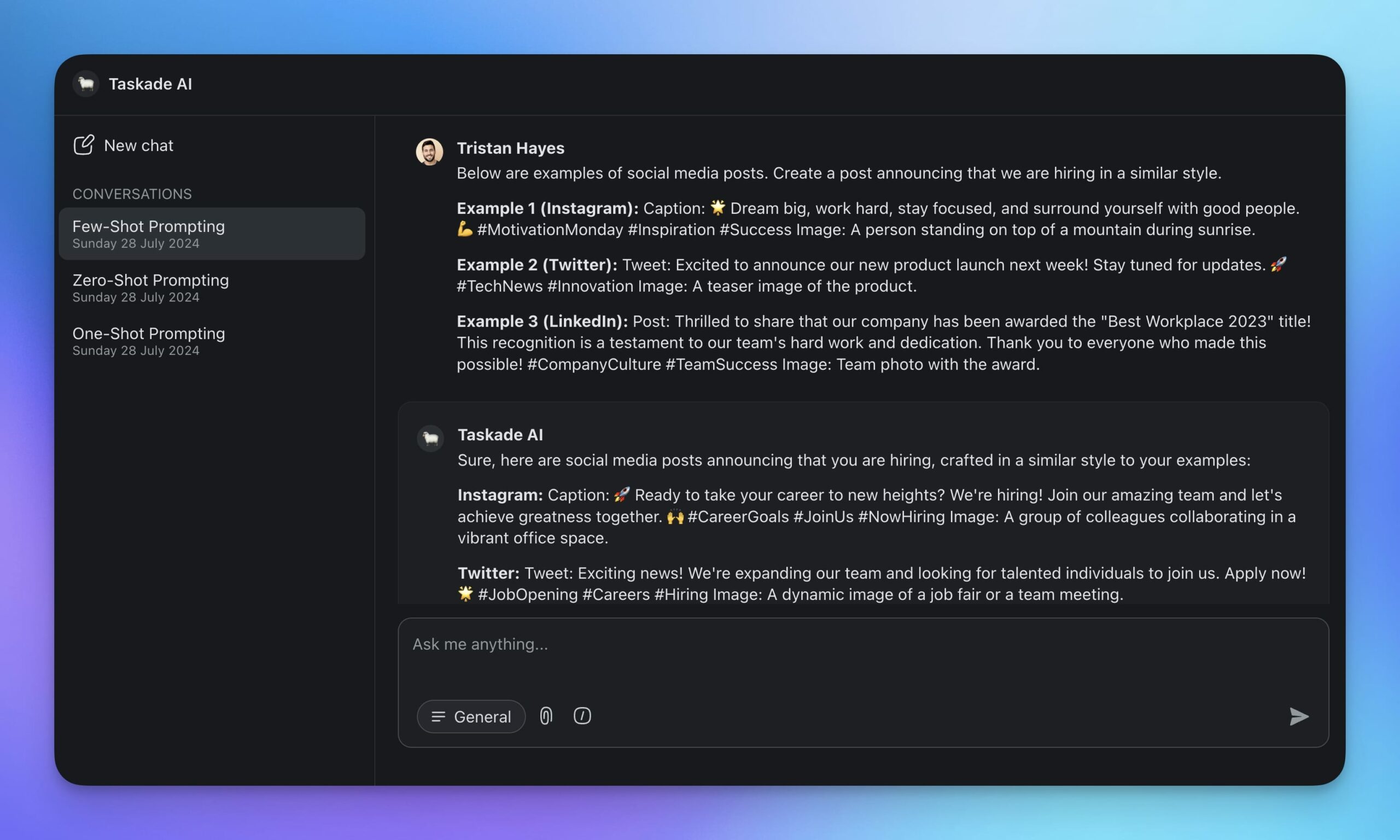

✌️ Few-Shot Prompting

Few-shot prompting gives the model a bit more help. It provides several examples to illustrate how the task should be executed. This gives the LLM more material to work with and lets it pick up on patterns.

Think of it like learning to play the piano. If your teacher gives you a few different songs to practice, each with varying levels of difficulty and styles, you’ll start to understand the patterns and techniques needed. With just a few pieces, you’ll get a sense of rhythm, fingering patterns, and musical expression.

For instance, if you're working on creating social media content with a unique voice, you might provide a few examples of posts that capture the desired tone and style. The source material will help the model better grasp the nuances of your brand’s voice and tailor the content to the audience.
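Here is one way to assemble a few-shot prompt for the brand-voice scenario above. It's a sketch: `build_few_shot_prompt` is a hypothetical helper, and the sample posts are placeholders you would replace with real posts from your brand.

```python
def build_few_shot_prompt(instruction: str, examples: list[str], topic: str) -> str:
    """Show several sample posts so the model can infer the shared voice."""
    # Number each sample so the model can tell where one post ends and the next begins.
    samples = "\n".join(
        f"Example {i}: {post}" for i, post in enumerate(examples, start=1)
    )
    return f"{instruction}\n\n{samples}\n\nNow write a post about: {topic}"


brand_posts = [  # Placeholder posts; swap in real ones that capture your tone.
    "Monday plan: coffee, checklist, conquer.",
    "Your to-do list called. It wants a glow-up.",
    "Small tasks, big wins. That's the whole strategy.",
]
prompt = build_few_shot_prompt(
    "Write a social media post in the same voice as these examples.",
    brand_posts,
    "our new calendar view",
)
print(prompt)
```

A handful of short, varied samples usually gives the model enough to pick up the pattern (punchy sentences, playful tone) without bloating the prompt.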

Few-shot prompting strikes a balance between simplicity and effectiveness. This makes it perfect for tasks where a bit of extra guidance can significantly enhance performance.

🤚 Multi-Shot Prompting

Multi-shot prompting provides the model with even more material to illustrate a task.

The process is similar to few-shot prompting (which is technically also multi-shot, just with fewer examples), but the larger set of examples tends to produce more consistent results. Here's how it works:

You start by providing several examples to guide the AI. For instance, if you need product descriptions, provide multiple samples showcasing different styles and formats. If you’re working on sentiment analysis, give examples of text labeled with different emotions, such as positive, negative, and neutral.

The LLM then analyzes these examples to learn the patterns and specifics of the task.
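The sentiment-analysis case translates naturally into code. This minimal sketch uses a hypothetical `build_multi_shot_prompt` helper and invented placeholder examples. It also refuses to build a prompt if any label is missing, reflecting the idea that multi-shot prompting should cover the full scope of the task; that check is a design choice for this sketch, not a requirement of any model.

```python
from collections import Counter

LABELS = ("positive", "negative", "neutral")


def build_multi_shot_prompt(examples: list[tuple[str, str]], text: str) -> str:
    """Build a sentiment-classification prompt from many labeled examples."""
    # Fail early if a label has no examples: the model can't infer a class it never sees.
    counts = Counter(label for _, label in examples)
    missing = [label for label in LABELS if counts[label] == 0]
    if missing:
        raise ValueError(f"No examples for label(s): {', '.join(missing)}")
    shots = "\n".join(f"Text: {t}\nSentiment: {label}" for t, label in examples)
    return (
        f"Classify the sentiment as one of: {', '.join(LABELS)}.\n\n"
        f"{shots}\n\nText: {text}\nSentiment:"
    )


labeled = [  # Placeholder examples; include edge cases from your own data.
    ("I love how fast the new update is!", "positive"),
    ("The app crashed twice today.", "negative"),
    ("The meeting moved to 3 PM.", "neutral"),
    ("Support fixed my issue in minutes. Great job!", "positive"),
    ("Not sure the redesign is an improvement.", "negative"),
    ("I downloaded the app yesterday.", "neutral"),
]
prompt = build_multi_shot_prompt(labeled, "The export feature works, but it's slow.")
print(prompt)
```

Ending the prompt with an open `Sentiment:` line nudges the model to answer in the same one-word format as the examples.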

Unlike more streamlined methods, multi-shot prompting covers different aspects and edge cases. It helps the model understand the full scope of tasks, which reduces errors and improves performance.

So, where does it come in handy?

One of the best examples is content creation. Present various examples of well-written articles, blog posts, or social media updates, and the model will generate content that matches different tones and styles.

Here’s a pocket-sized comparison of multi-shot prompting and the other methods we’ve discussed:

| Prompt Type | Description | Use Cases | Advantages | Disadvantages |
|---|---|---|---|---|
| Zero-Shot Prompting | The model is provided with a task without any examples or specific training data related to that task. | Generalized tasks that do not require domain-specific knowledge. | Quick setup, no need for examples, useful for generalized tasks. | May result in lower accuracy and relevance. |
| One-Shot Prompting | The model is given a single example along with the task instruction. | Tasks where a single example can sufficiently guide the model's output. | Simple to implement, improved guidance compared to zero-shot. | Limited improvement over zero-shot, may still lack context. |
| Multi-Shot Prompting | The model is provided with multiple examples to learn from before performing the task. | Complex tasks requiring pattern recognition from multiple instances. | Better performance on complex tasks, more context provided. | More setup time required, examples need to be well-chosen. |

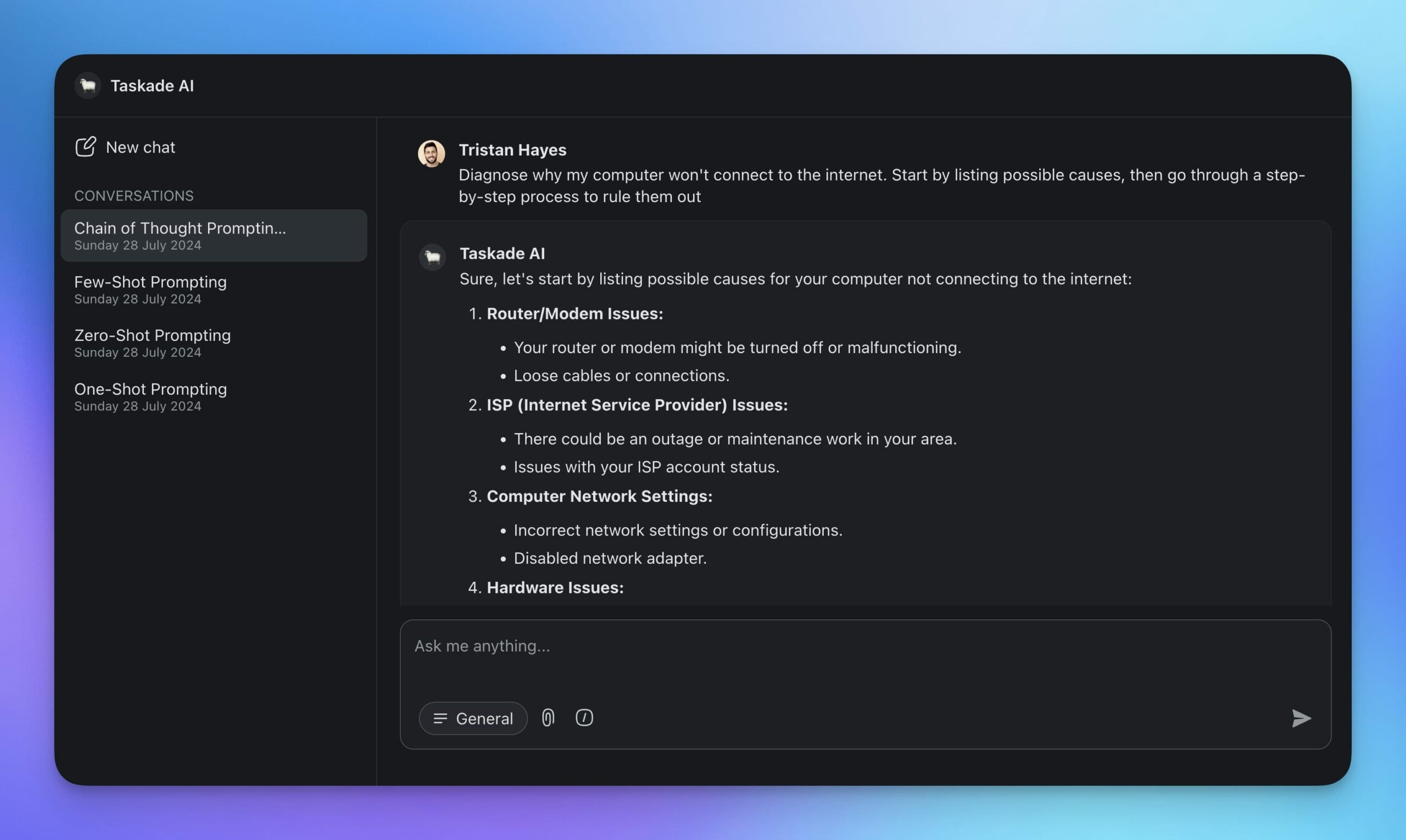

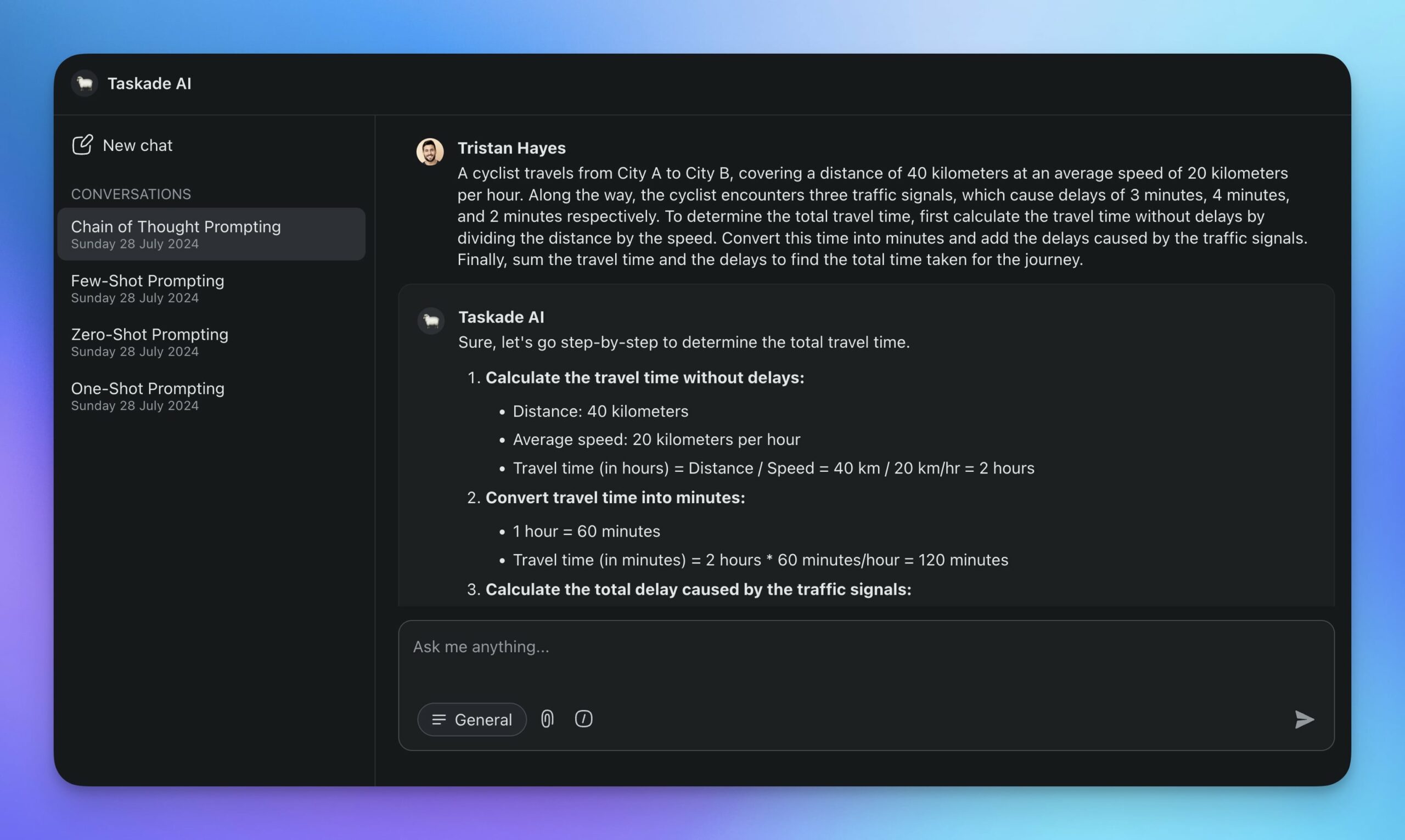

👉 Chain-Of-Thought Prompting (CoT)

In chain-of-thought prompting, prompts guide AI through a series of logical steps to complete a task. Instead of jumping straight to the answer, you’re essentially asking AI to "think out loud."

If the task is diagnosing a technical issue, you may ask the LLM to start by listing possible causes, then go through a step-by-step process to rule them out until it finds the root problem.

Mathematical problems are another great example.

Instead of asking for the final answer right away, you may guide the AI like this: "First, identify the given variables. Next, write down the relevant equations. Then, solve for the unknowns step-by-step."
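The step-by-step guidance above can be turned into a reusable chain-of-thought template. This sketch uses hypothetical helpers: `build_cot_prompt` wraps a problem with the three steps from the text, and `extract_answer` pulls out a final line that starts with `Answer:`. That `Answer:` convention is an assumption added here so the reasoning and the result are easy to separate; the sample response is hand-written, not real model output.

```python
from typing import Optional

COT_STEPS = (
    "First, identify the given variables.",
    "Next, write down the relevant equations.",
    "Then, solve for the unknowns step-by-step.",
)


def build_cot_prompt(problem: str, steps: tuple[str, ...] = COT_STEPS) -> str:
    """Wrap a problem with explicit reasoning steps before the final answer."""
    numbered = "\n".join(f"{i}. {step}" for i, step in enumerate(steps, start=1))
    return (
        f"Problem: {problem}\n\n"
        f"Work through it in this order, showing each step:\n{numbered}\n"
        "Finally, state the answer on its own line, starting with 'Answer:'."
    )


def extract_answer(response: str) -> Optional[str]:
    """Return the text after the last 'Answer:' line, or None if there isn't one."""
    for line in reversed(response.splitlines()):
        if line.startswith("Answer:"):
            return line.removeprefix("Answer:").strip()
    return None


print(build_cot_prompt("A car travels at 60 km/h for 2 hours. How far does it go?"))

# Hand-written sample of the kind of response the prompt asks for.
sample = (
    "1. Speed v = 60 km/h, time t = 2 h.\n"
    "2. Distance d = v * t.\n"
    "3. d = 60 * 2 = 120 km.\n"
    "Answer: 120 km"
)
print(extract_answer(sample))  # 120 km
```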

By breaking down tasks into steps, CoT prompting reduces the risk of logical errors. It also provides a transparent view of the model's reasoning. It's like a detective who follows logical steps to solve a case.

👋 Non-Text Prompting

Non-text prompting relies on forms of input other than natural language to interact with AI models. Instead of just typing, you can upload images, audio, or even videos to guide the model's responses.

This technique uses what’s called multimodal AI.

Imagine you're working on a project and need to describe a complex diagram. Normally, you'd need to type out a detailed description. With multimodal AI, you can simply upload a screenshot of the diagram and the large language model will scan the image and “convert” it into text for you.

Let's consider a different scenario where your task is to analyze a recorded interview. Usually, you'd have to type out the transcript manually. With non-text prompting, you can upload the audio file, and the AI will transcribe the conversation into an easily searchable text document for you.

This method is perfect for analyzing images, diagrams, voice samples, and videos. It’s also useful when you're working with source materials that aren't readily available in a text format.
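Under the hood, a multimodal prompt is usually a single message that mixes text and media. This sketch builds such a message for the diagram example. It assumes the content-part shape used by OpenAI's Chat Completions API (a `"text"` part plus an `"image_url"` part holding a base64 data URL); other providers use different field names, and audio or video inputs use yet other formats, so treat the structure as an assumption. `build_image_prompt` is a hypothetical helper, and nothing is sent to a model.

```python
import base64


def build_image_prompt(image_bytes: bytes, instruction: str,
                       mime_type: str = "image/png") -> dict:
    """Package an image and a text instruction into one chat message.

    Assumed format: OpenAI-style content parts. Check your provider's docs.
    """
    # Images travel as base64 text inside a data URL.
    encoded = base64.b64encode(image_bytes).decode("ascii")
    return {
        "role": "user",
        "content": [
            {"type": "text", "text": instruction},
            {"type": "image_url",
             "image_url": {"url": f"data:{mime_type};base64,{encoded}"}},
        ],
    }


# Placeholder bytes (only the 8-byte PNG signature, not a real image).
# For a real screenshot, use pathlib.Path("diagram.png").read_bytes().
fake_png = b"\x89PNG\r\n\x1a\n"
message = build_image_prompt(fake_png, "Describe this diagram in plain text.")
print(message["content"][0])
```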

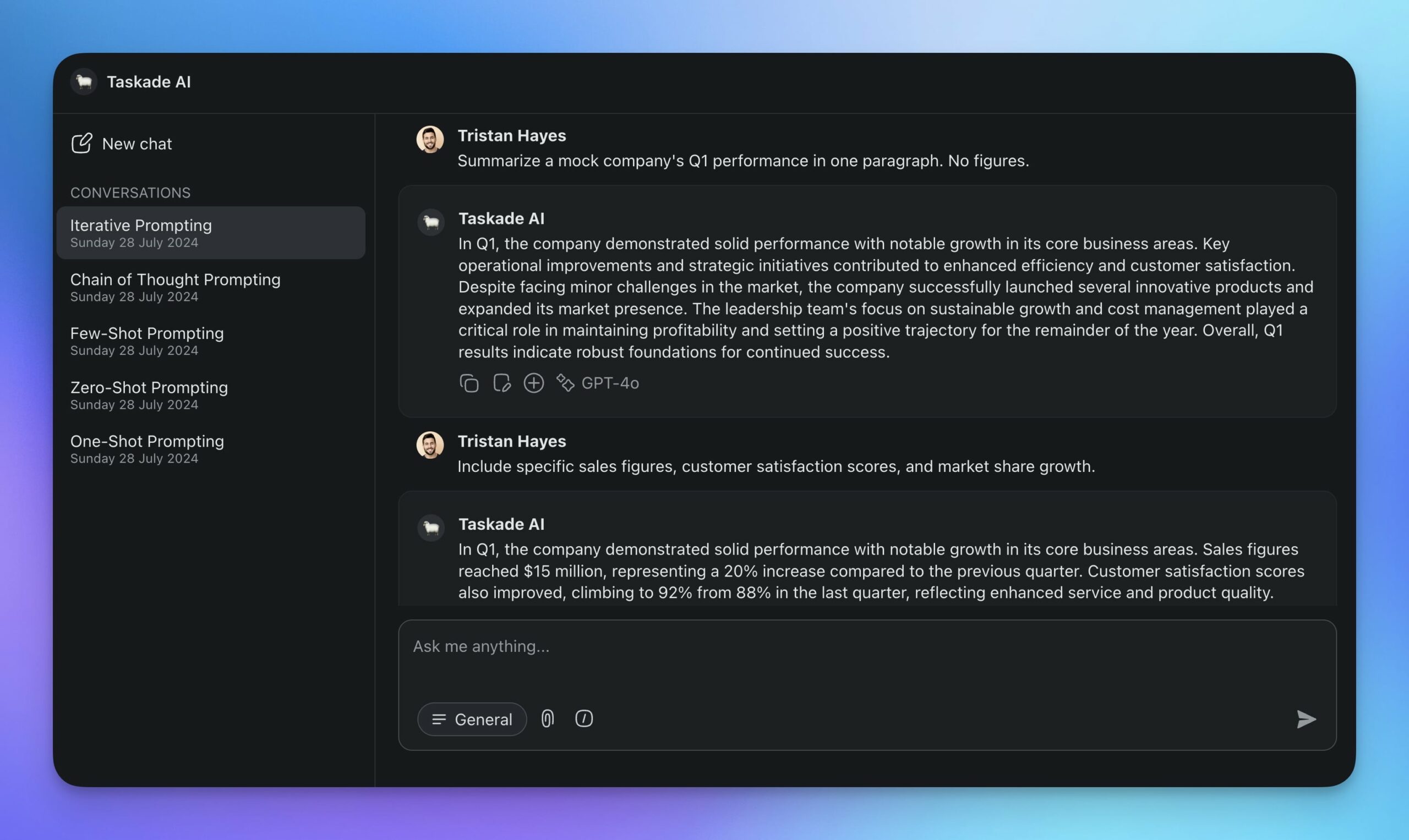

✍️ Iterative Prompting

AI prompting is inherently iterative. You start with a basic set of instructions and then refine them based on the response. This back-and-forth process helps get more accurate and relevant results.

For example, let's say you're drafting a business proposal. You might start with: “Write a proposal for a new marketing strategy.” The first draft may be too general, so you can reprompt the AI to draw attention to specific parts of the document: “Focus on social media channels and include a budget plan.”
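The proposal example boils down to a loop: send a prompt, read the reply, send a refinement, all within one conversation history so the model can build on its earlier draft. In this sketch, `fake_model` is a hypothetical stand-in for a real LLM call (it just echoes the latest request), and `reprompt` is a hypothetical helper.

```python
def fake_model(messages: list[dict[str, str]]) -> str:
    """Hypothetical stand-in for an LLM call; echoes the latest request."""
    return f"Draft based on: {messages[-1]['content']}"


def reprompt(conversation: list[dict[str, str]], user_text: str) -> str:
    """Add a user turn, get a reply, and keep both in the shared history."""
    conversation.append({"role": "user", "content": user_text})
    # The whole history is passed each time, so refinements build on earlier turns.
    reply = fake_model(conversation)
    conversation.append({"role": "assistant", "content": reply})
    return reply


conversation: list[dict[str, str]] = []
reprompt(conversation, "Write a proposal for a new marketing strategy.")
reprompt(conversation, "Focus on social media channels and include a budget plan.")
print(len(conversation))  # 4
```

Keeping every turn in `conversation` is what lets the second request read as a refinement of the first draft rather than a brand-new task.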

In a way, iterative prompting is similar to using Google search. You try one search phrase, check the results, then tweak your search terms and try again until you find what you’re looking for.

Sometimes you just need a small tone adjustment. Other times, you might need to completely rephrase your prompt. Creating feedback loops helps you get the most accurate and relevant response.

Iterative prompting is particularly useful for creative applications such as writing articles, blog posts, or marketing copy. It's also effective for generating and refining code snippets. Reprompting doesn't retrain the model or change how it behaves in future conversations; it only keeps the output on track within the current conversation thread.

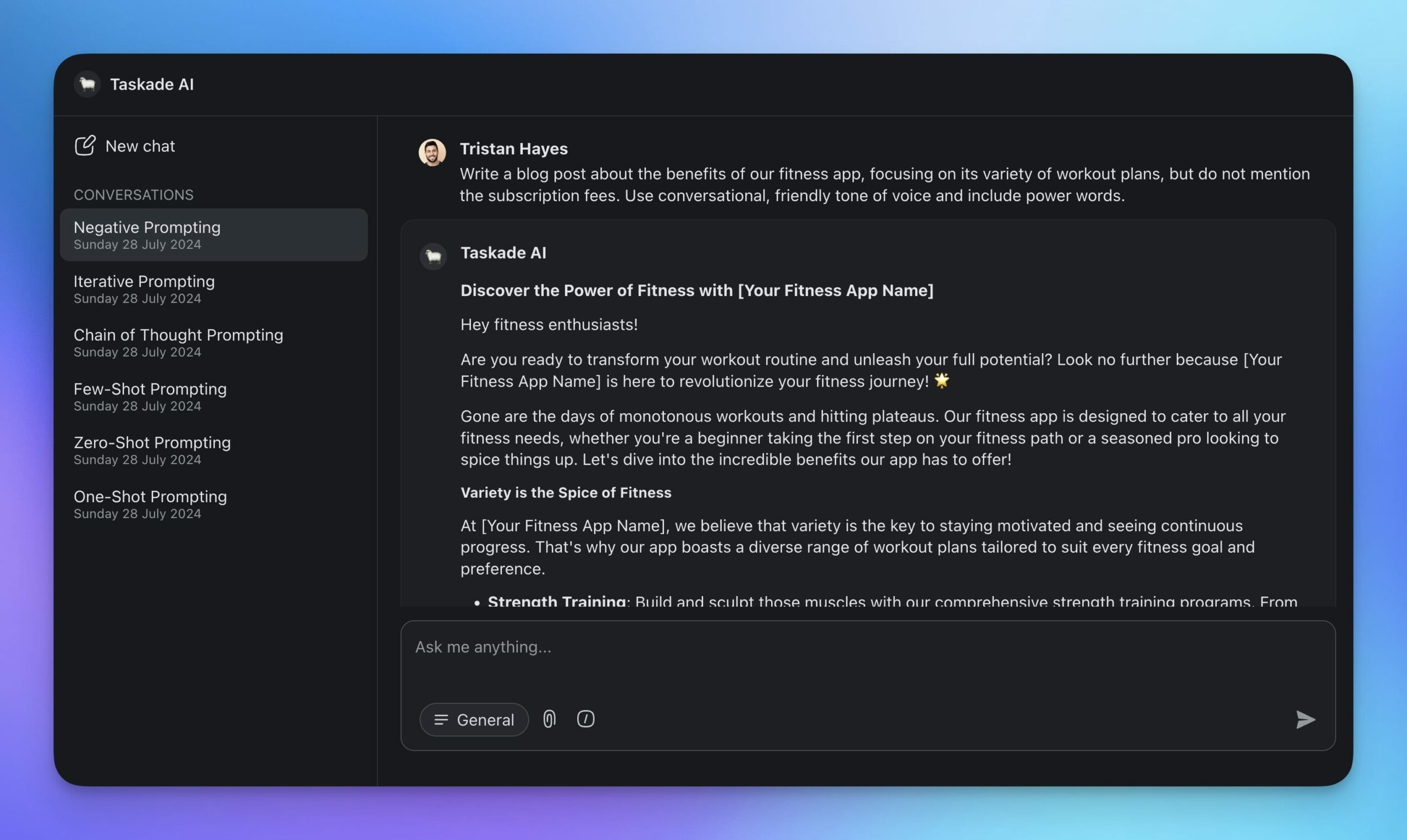

🙅 Negative Prompting

Sometimes, instead of telling the AI what you want, it's more effective to tell it what you don't want. This is called negative prompting, and it helps you control the responses by specifying what to leave out.

For example, you might say, "Write a blog post about the benefits of our fitness app, focusing on its variety of workout plans, but do not mention the subscription fees."

The AI should leave those details out of its output.

The prompt above mixes positive and negative signals. Not only does it instruct the large language model what to avoid, but it also tells it what specifically it should focus on.

But you can be even more precise. At the beginning of every conversation, you can provide a list of words and phrases that should not be included. For instance: "Generate a company mission statement, but do not use the words 'innovation,' 'disruption,' and 'leader.'”
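Here is a small sketch of the mission-statement example. `build_negative_prompt` adds the "do not use" list to the task, and `find_banned_terms` checks a draft afterward. Both are hypothetical helpers, and the post-check is an addition of this sketch: models don't always honor "do not" instructions, so verifying the output is a cheap safety net. Matching is case-insensitive and whole-word only, so "leaders" would not be flagged for "leader".

```python
import re


def build_negative_prompt(task: str, banned: list[str]) -> str:
    """Append an explicit list of words the model must not use."""
    quoted = ", ".join(f"'{word}'" for word in banned)
    return f"{task} Do not use the words {quoted}."


def find_banned_terms(text: str, banned: list[str]) -> list[str]:
    """Return banned words that still appear in the text (case-insensitive, whole words)."""
    return [
        word for word in banned
        if re.search(rf"\b{re.escape(word)}\b", text, flags=re.IGNORECASE)
    ]


banned = ["innovation", "disruption", "leader"]
print(build_negative_prompt("Generate a company mission statement.", banned))

draft = "We are a leader in helping teams plan their work."  # Hypothetical model output.
print(find_banned_terms(draft, banned))  # ['leader']
```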

Negative prompting helps you keep a professional tone by avoiding sensitive or inappropriate topics. It reduces the risk of harmful or misleading content and makes it easier to stick to guidelines and policies.

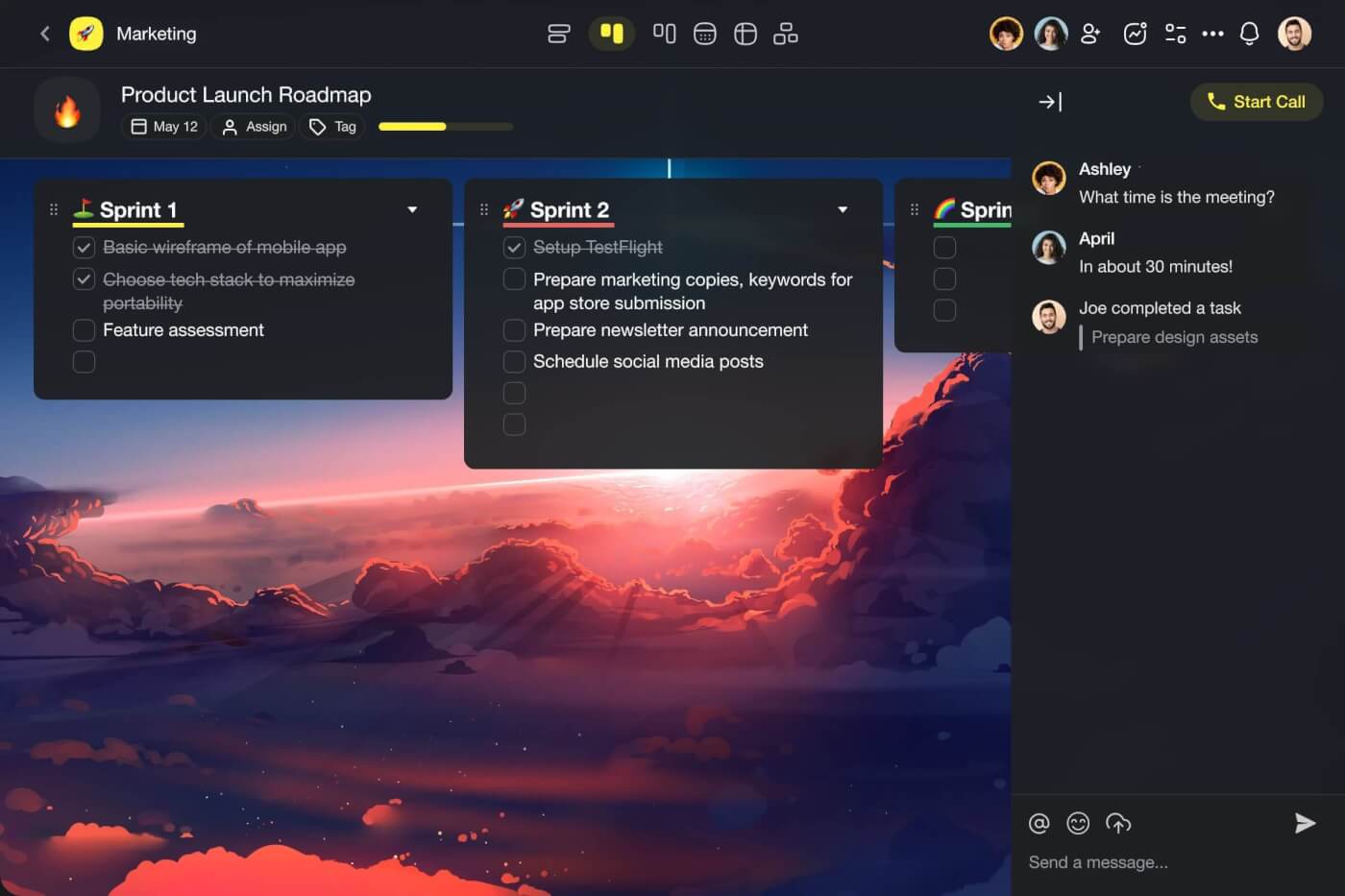

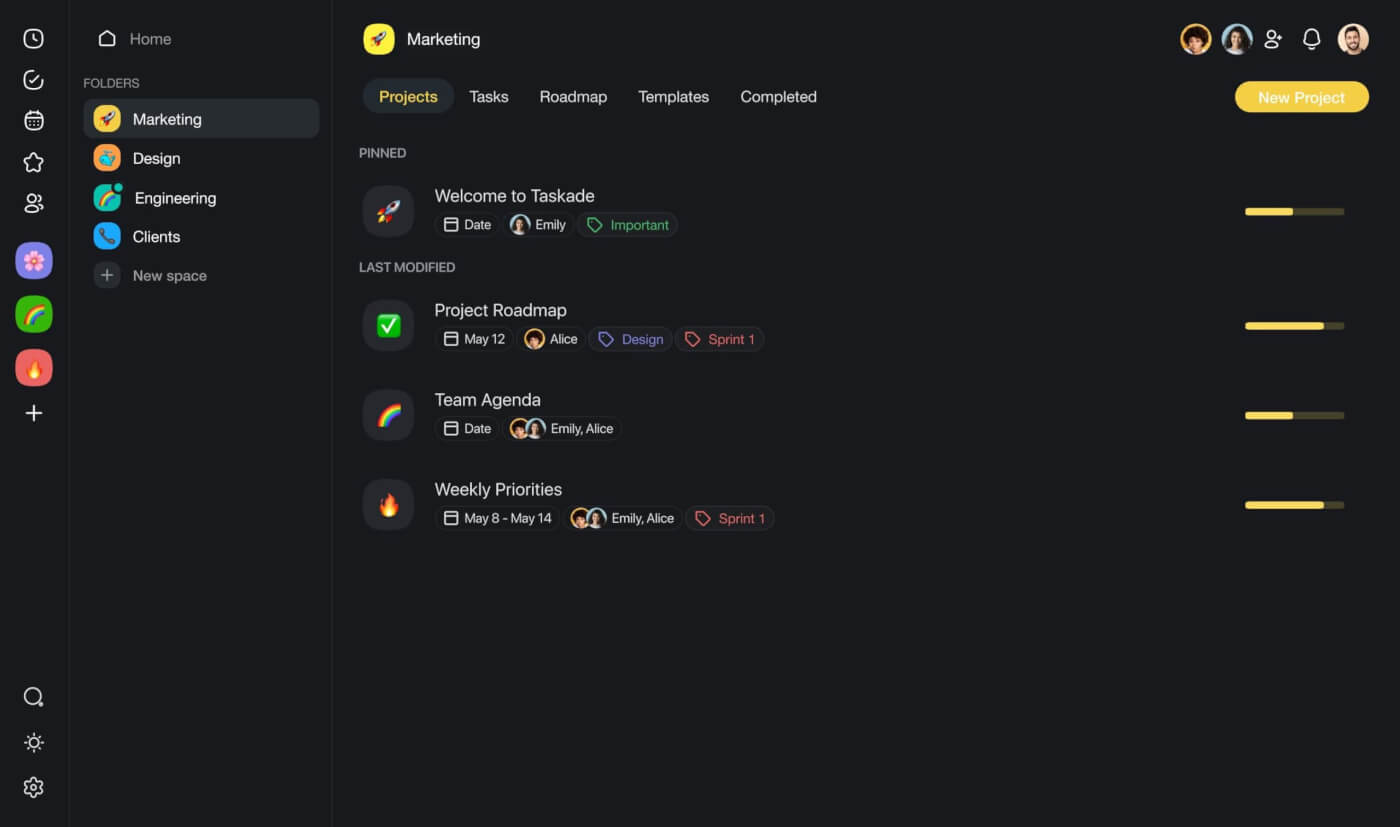

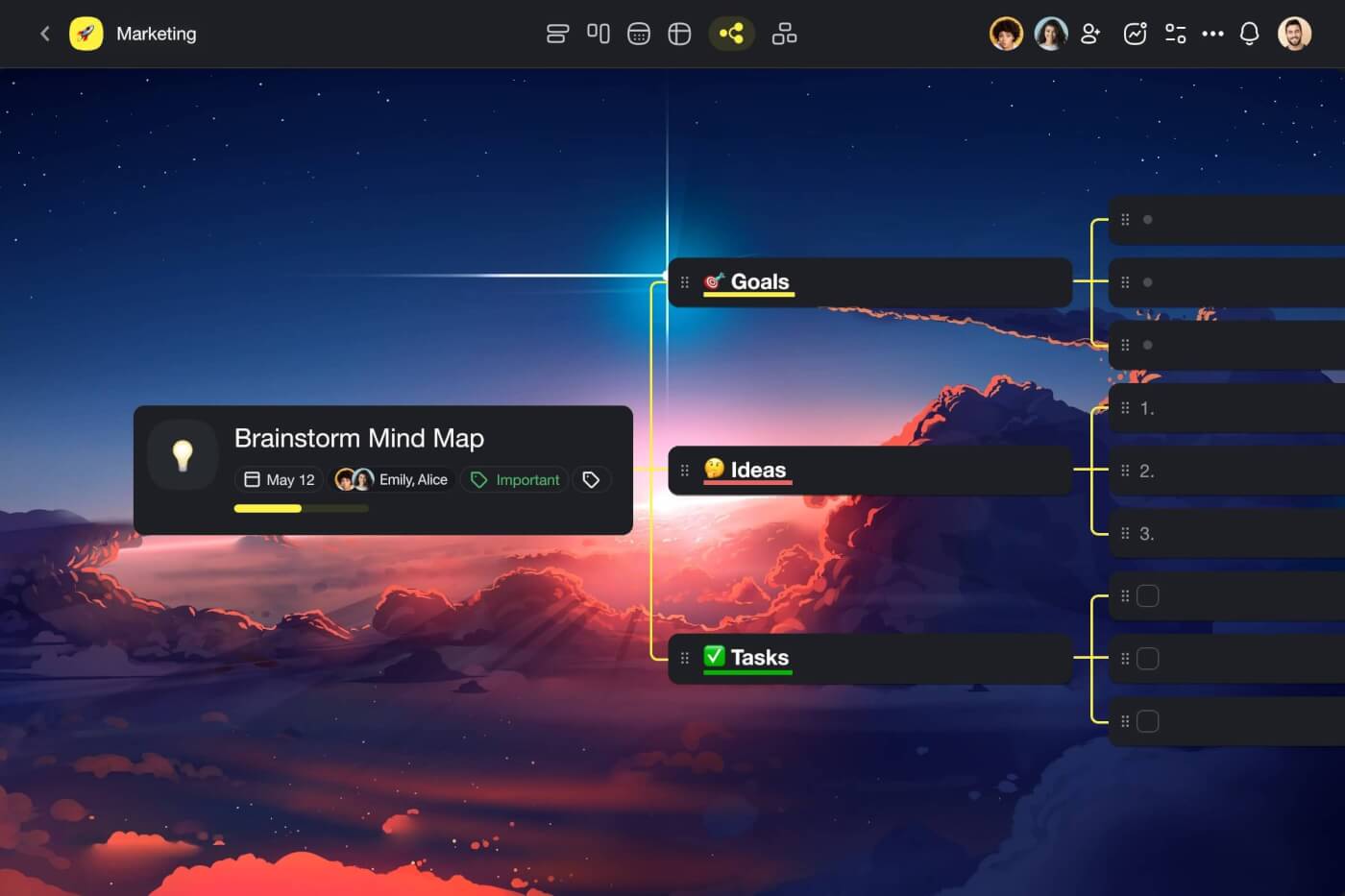

🐑 Implementing Different Types of Prompting in Taskade

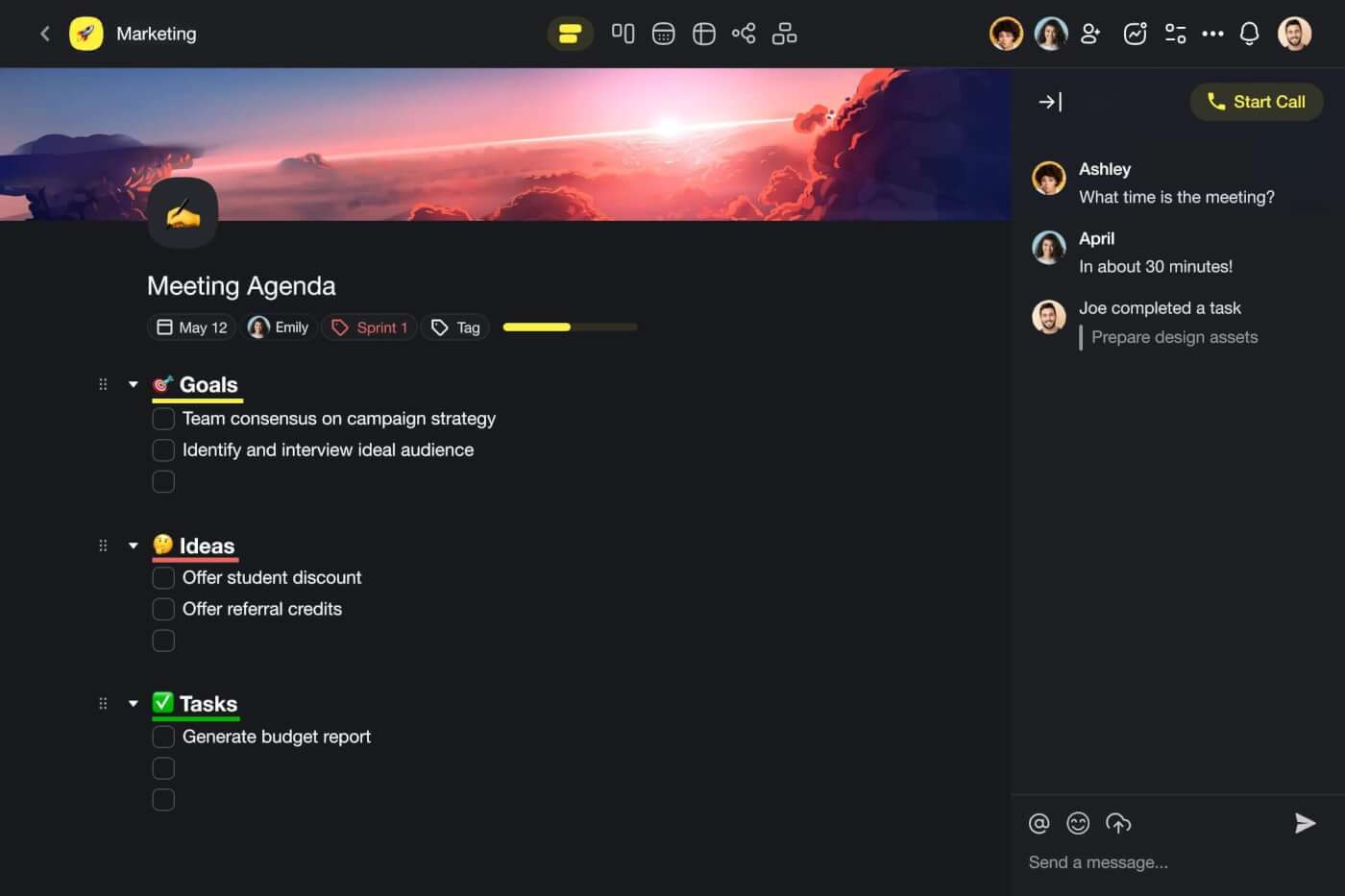

🐑 New to Taskade? Taskade is a productivity platform designed for project & task management, writing, planning, and collaboration. It leverages advanced AI and automation features to help you work smarter, without the hassle of switching between multiple tools.

There are a few ways to interact with Taskade AI.

You can start a conversation with Taskade AI inside projects and ask for help with all kinds of tasks (of course, using the tips from this guide). Or you can use the built-in AI Assistant to simplify your work inside the project editor, without writing any prompts!

But there is an even easier approach that will let you make the most of AI without the grunt work.

Meet Custom AI Agents. 🤖

Build Your First Agent

Agents are revolutionizing the way we interact with AI. They are smart, fully customizable assistants you can train on your own knowledge and equip with a set of tools to tackle a whole range of tasks.

Every agent can take a specific role, just like in a human team.

You can create a Copywriter agent to generate different types of written materials.

You can build a Data Analyst agent that will help you crunch numbers and generate reports.

Or you can create a Personal Assistant agent to manage your schedule and even draft emails for you.

This unlocks a completely new way to communicate with AI. Agents use the same advanced LLMs as their "brains," but instead of typing prompts, you simply set a task and let the agent handle the rest.

Sign up to build your first AI agents for free! 🤖

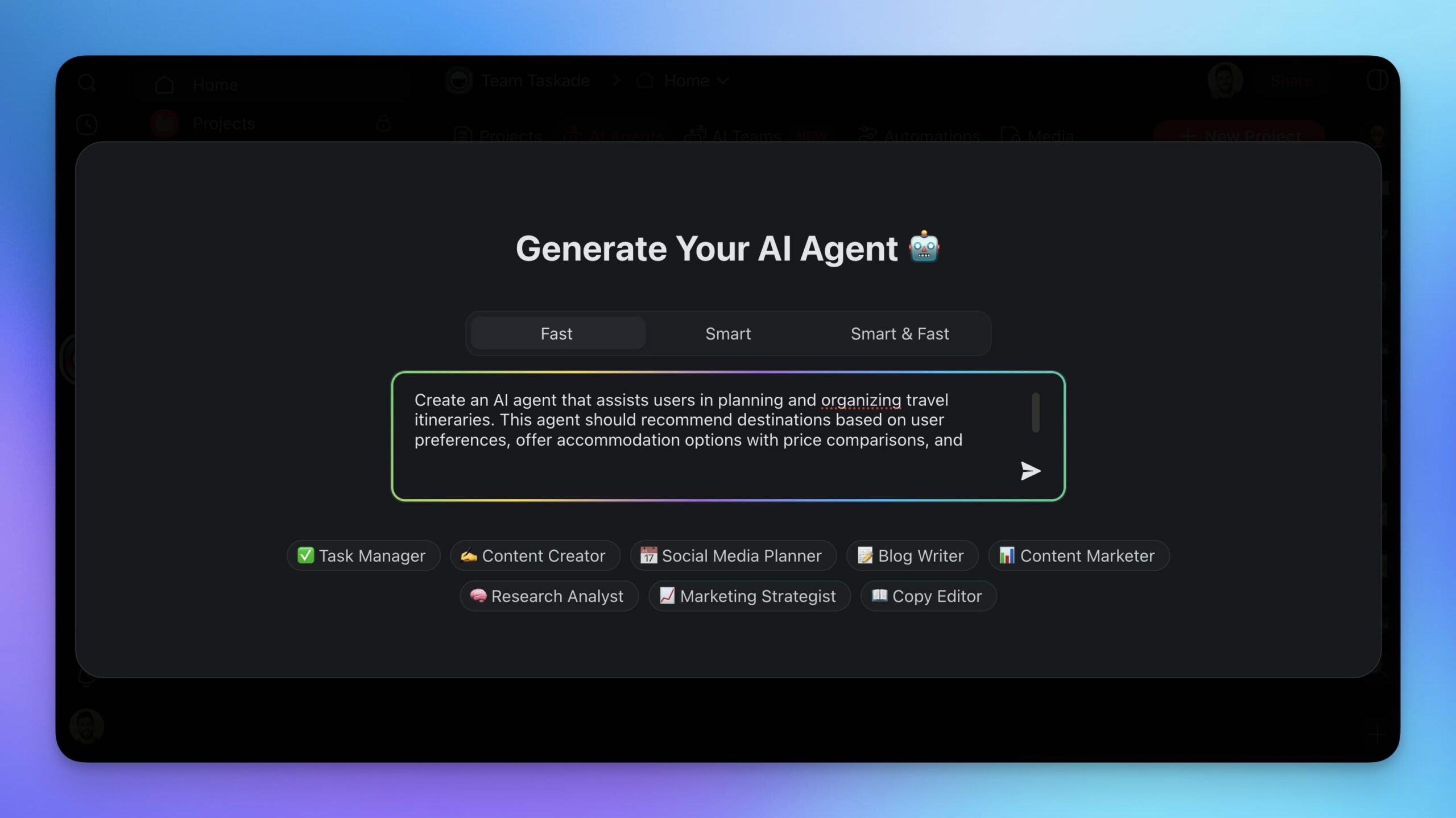

To build your first AI agent, simply head to the Agents tab in your Taskade workspace (if you don’t have one, jump over here to learn how to set it up), and click Generate with AI.

Now all you need to do is describe the type of agent you want to create and let Taskade do the rest.

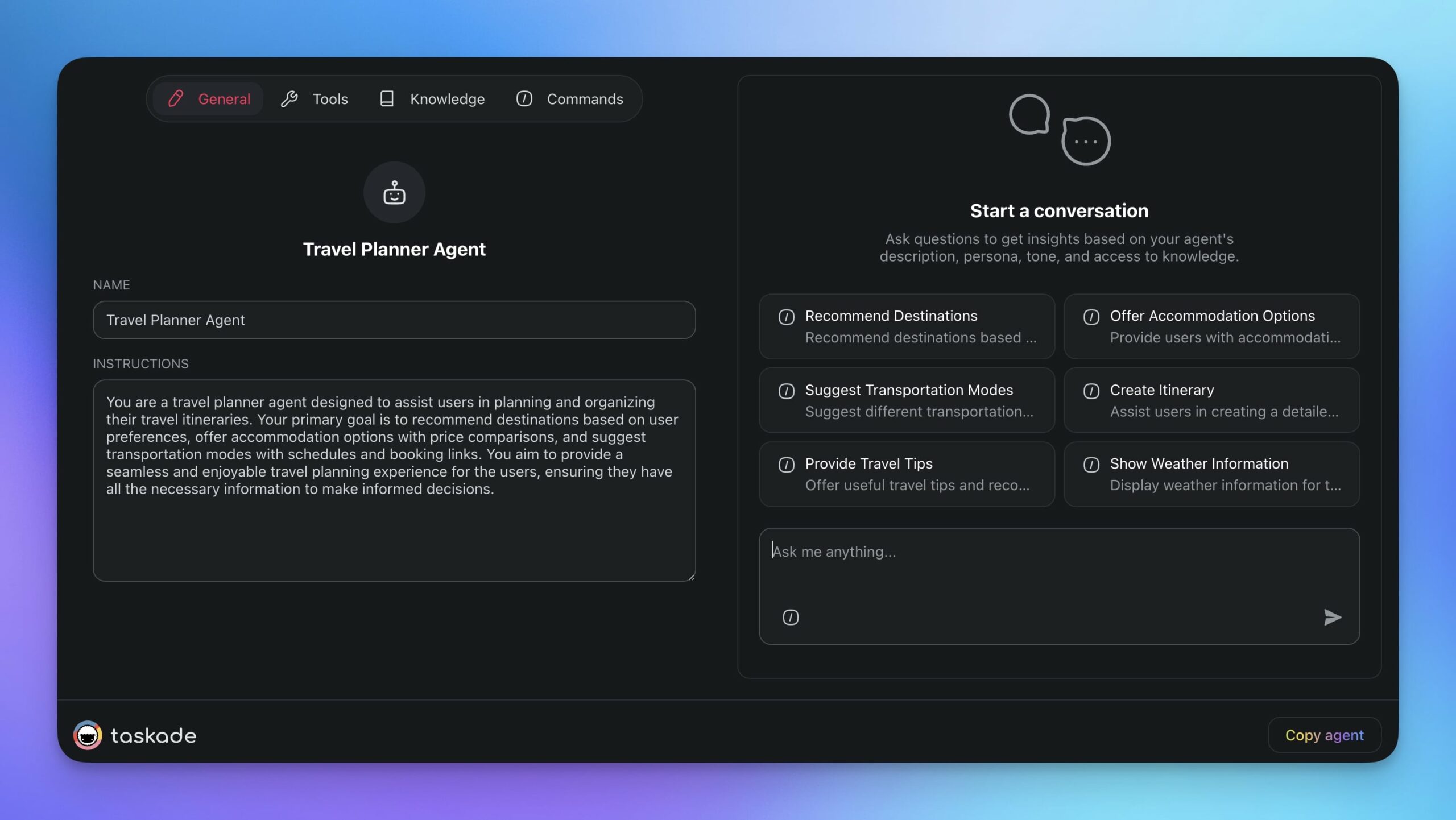

Simple, right? And here's the final result:

(psst… check our guide to AI agents and explore the prompt catalog for a list of AI prompts)

Once your agent is ready, it's time to tailor it to your specific needs.

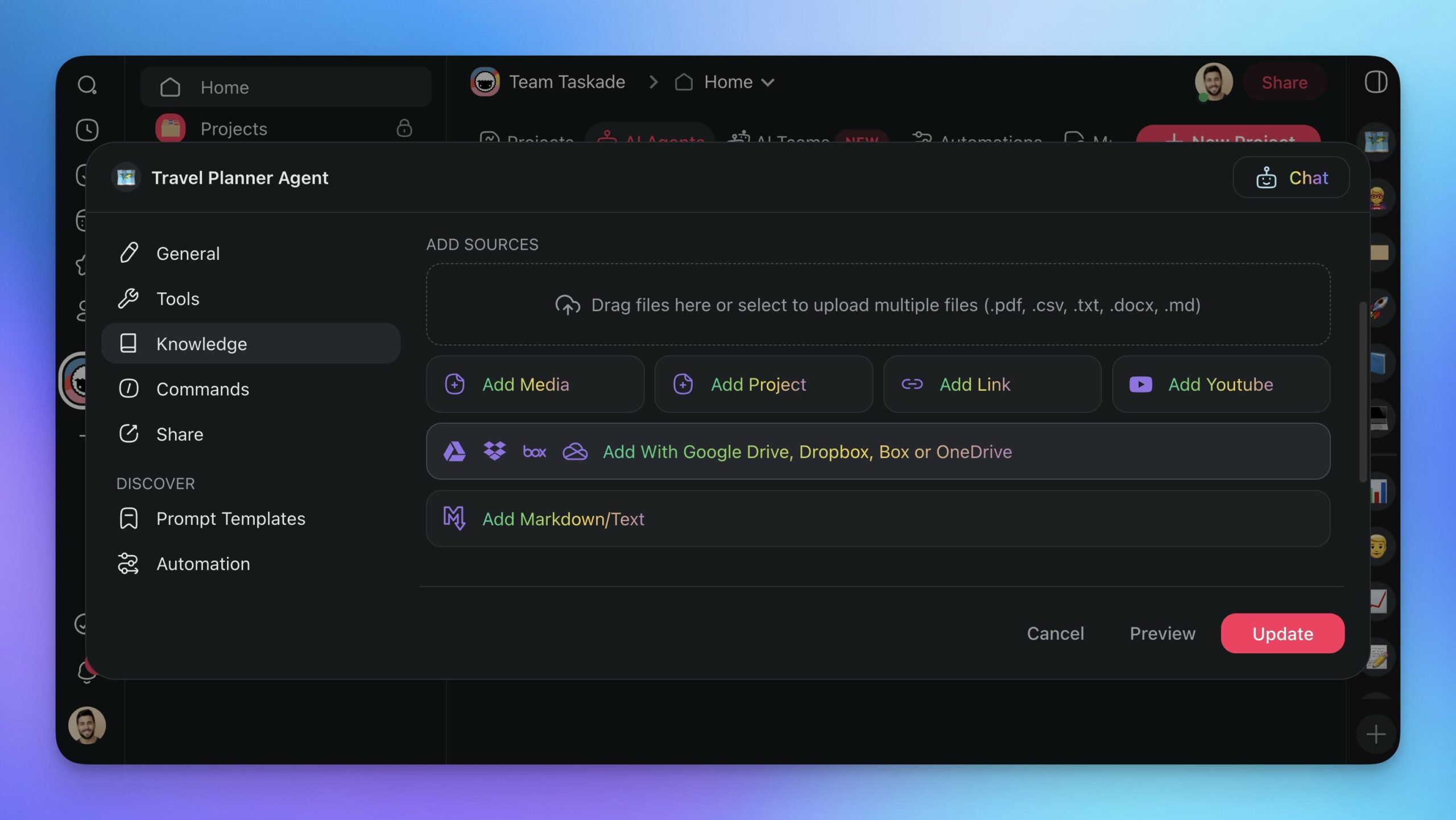

Fine-Tune Agent Knowledge

The main reasons why you’d build an agent over chatting with AI are speed and flexibility.

For instance, if your team follows a specific format for press releases, you can set up a Press Release agent and train it with examples of past releases. The AI will reverse-engineer the source material and imitate the style to produce new press releases in the desired format. This is called fine-tuning.

In this context, fine-tuning means supplementing the LLM's general training data with your own knowledge for more tailored results.

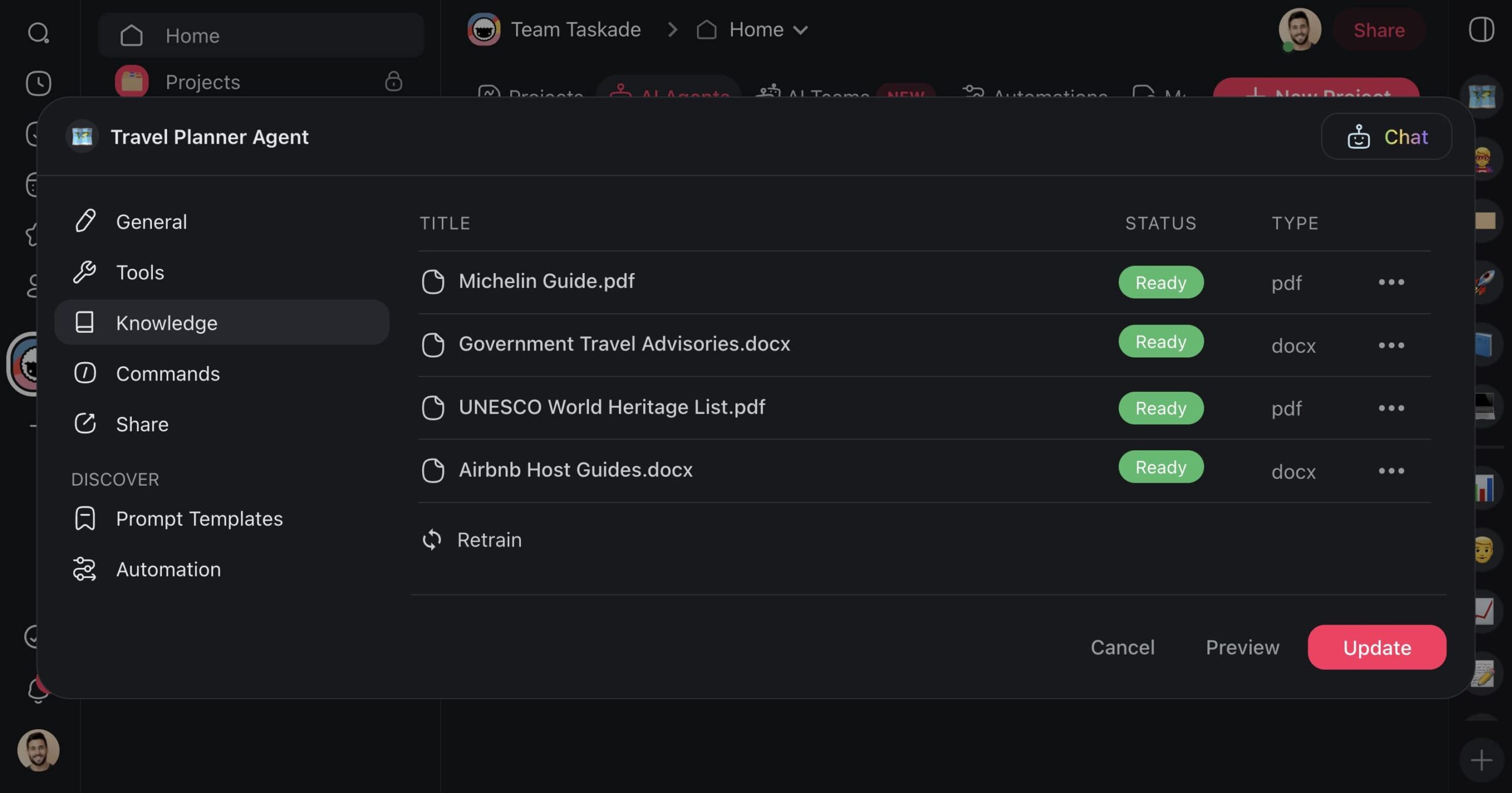

To fine-tune your agent, go to the Knowledge tab in the agent menu, and use one of the available options to upload bits and pieces of information for the agent to learn from.

The next time you ask the agent to write a piece of content, you won't need to explicitly tell it what language to avoid. You can simply attach a file with a list of words or terms it shouldn't use. You don't have to tell the agent to be funny, either. It can pick up on your unique style from the samples you give it.

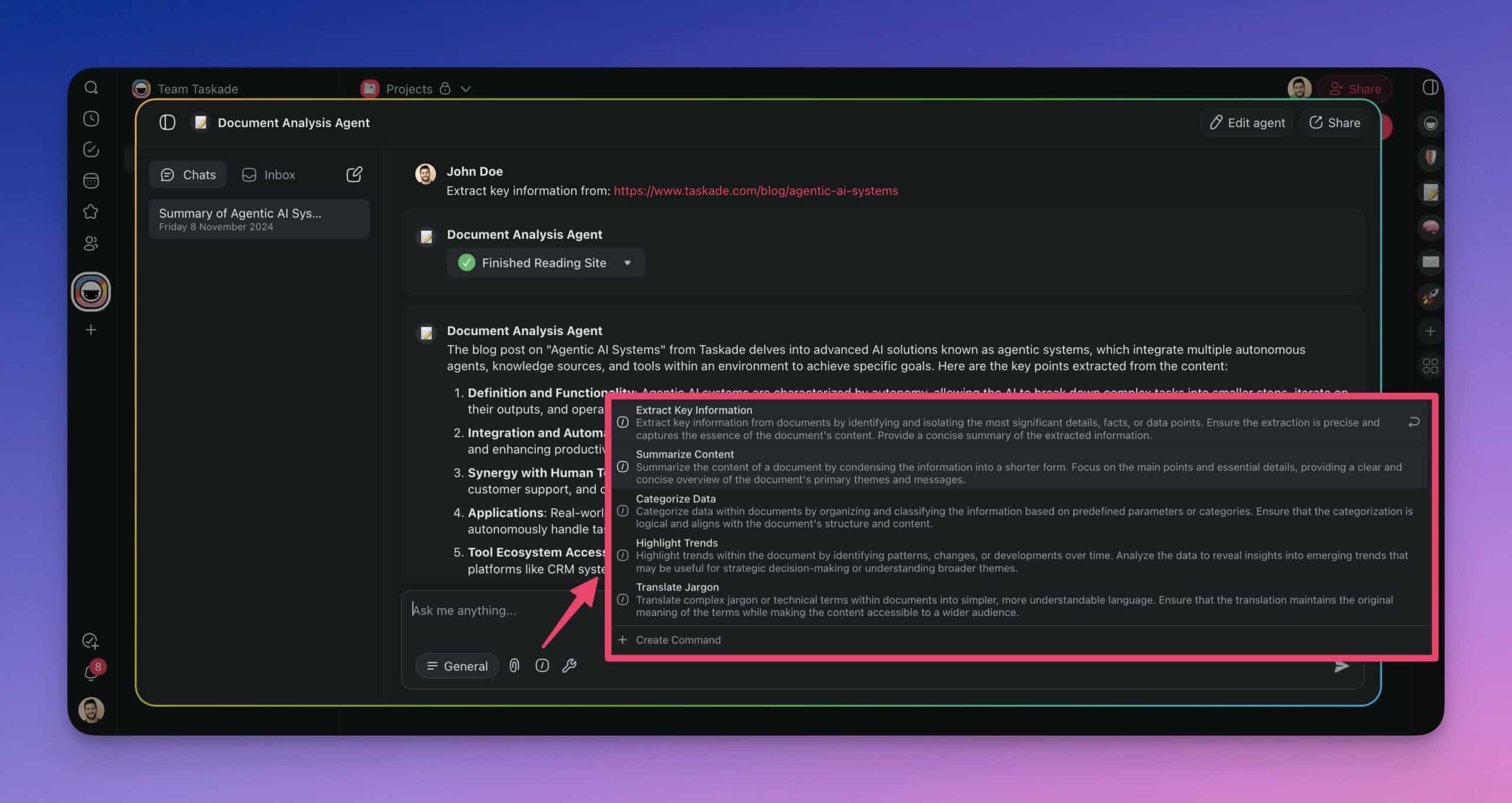

Create Custom Commands

Agent commands are the levers that let agents interact with their environment and you with the agents.

Each command consists of a single prompt, like a canned response. You write the prompt once and can then call it whenever you need it, anywhere inside Taskade. It's that simple.

The agent we created earlier already comes with a set of predefined commands. This is also true for any of the agent templates available in the agent creator. But you can add or edit the commands at any time.

First, go to the Commands tab in the agent menu. Next, pick any of the existing commands to edit it, or click ➕ Add command to create your own. Finally, type a prompt, name it, and click Update.

Now, instead of crafting detailed prompts, you just need to type a task in the project editor and enter “/” followed by the name of the custom command. The agent will immediately jump into action.
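Conceptually, a custom command is a named prompt template that gets filled in with your task. The sketch below illustrates that idea only; it is not Taskade's implementation or API. The `COMMANDS` registry, the template wording, and `expand_command` are all hypothetical.

```python
# Hypothetical registry: command name -> stored prompt (not Taskade's API).
COMMANDS = {
    "press-release": "Rewrite the following as a press release in our house style:\n{task}",
    "summarize": "Summarize the following in three bullet points:\n{task}",
}


def expand_command(line: str) -> str:
    """Turn '<task> /command-name' into the full stored prompt."""
    # rpartition splits on the last '/', so slashes inside the task are kept.
    task, sep, name = line.rpartition("/")
    name = name.strip()
    if not sep or name not in COMMANDS:
        raise ValueError(f"Unknown command in: {line!r}")
    return COMMANDS[name].format(task=task.strip())


print(expand_command("Launch of our new calendar view /press-release"))
```

The payoff is the same one the text describes: the detailed prompt is written once, and each later use only needs the task plus a short command name.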

(Bonus Point) Build Your AI Workforce

Having one agent is pretty nice. But what if you could build your own AI workforce?

Now you can. Taskade AI lets you set up a squad of specialized agents and get them to collaborate on any task, right inside your workspace. And it's way cooler than it sounds.

To get started, go to your workspace, and open the 🤖 AI Teams tab. Next, click ➕ Create Team, give your team a name, and add a few agents that will collaborate within the group.

You can create specialized teams for just about anything.

Marketing Team: Drafting social media posts, generating copy, and analyzing campaigns.

Research Team: Gathering data, summarizing research papers, and creating detailed reports.

Support Team: Responding to inquiries, troubleshooting issues, and providing recommendations.

Product Development Team: Brainstorming ideas, drafting specifications, and managing timelines.

Event Team: Scheduling events, sending out invitations, and tracking RSVPs.

Sales Team: Drafting sales pitches, analyzing market trends, and following up with leads.

Whenever you interact with your team, Taskade AI will pick the best agent for the job. Agents will coordinate and delegate tasks to the right members. They will also share information, use different tools, prompt each other, and work together to get complex tasks done efficiently.

Build the AI Workforce of the future with Taskade AI! 🚀

👋 Conclusion

Alright, time to wrap this up.

Effective prompt engineering is one of the hottest skills today, and it will remain a key competence in the years to come. So, whether you want to chat with AI or build your AI workforce, mastering this skill will make your interactions with AI easier, faster, more precise, and less frustrating.

So dive in, experiment, and watch as your productivity soars with Taskade AI.

But before you go all-in like a kid in a candy store, here are a few key takeaways from this article. 👇

Prompt engineering is all about crafting precise instructions for optimal AI responses.

Mix and match prompting techniques for the best results.

Use precise language and understand the basic workings of large language models (LLMs).

Embrace trial and error. Iterative prompting helps refine instructions for more accurate responses.

Sometimes, telling the AI what not to do is just as important as telling it what to do.

Build specialized AI teams to handle complex tasks efficiently, without the grunt work.

And that's it for today!

Frequently Asked Questions About Types of Prompt Engineering

What is a prompt?

A prompt is the input or instruction you give to an AI model to generate a response. It's essentially the question or command you feed into the system to get a specific output. Think of it as a way of communicating with the AI. Just like you need to be clear and specific when asking someone for directions, you need to be clear and specific when giving a prompt to an AI.

What is prompt engineering?

Prompt engineering is the process of designing and refining the inputs (prompts) given to AI models to achieve the desired output. It's a crucial part of working with AI systems, especially those based on natural language processing like GPT-3 or GPT-4. Specific, precise, and context-rich prompts help artificial intelligence generate a more relevant and useful response.

How to write good prompts?

To write good prompts, be clear and specific about what you want. Provide context and details to guide the AI effectively. Use precise language to eliminate ambiguity, and make your instructions as detailed as necessary. For instance, instead of saying "Tell me about trees," ask "Describe the different types of trees found in North American forests and their ecological roles."